Engram: Cognitive-Inspired AI Memory Architecture

Abstract


Most production AI memory is retrieval-augmented generation (RAG): embed old conversations, search by similarity, inject the results into context. This works, but it's not how human memory works.

Human memory consolidates similar experiences over time. It allows unused information to decay. It updates retrieved memories with new information. It strengthens repeatedly accessed facts.

I built Engram to test whether applying cognitive science principles to AI memory improves long-term conversational coherence. After two weeks in production managing 3,030 memories with 87.9% entity coverage and temporal relationship tracking, the system demonstrates measurable improvements in context continuity, contradiction prevention, and memory precision.

The Problem

Standard RAG implementations suffer from several fundamental issues:

No Consolidation: Duplicate or similar information accumulates without merging. "Ben prefers dark mode" stored three times creates redundancy and retrieval noise.

No Decay: All memories persist with equal weight regardless of relevance. Debugging output from January competes with critical decisions from February.

No Reconsolidation: When facts change, most systems either duplicate or miss the update entirely. "Ali works at Google" and "Ali works at Meta" both exist with no indication which is current.

No Temporal Context: RAG can't answer "What did I think about X in January?" or "When did this fact change?"

No Certainty Tracking: "Ben said X" and "Ben probably thinks X" carry equal weight.

Cognitive Science Principles

Engram implements five mechanisms from memory research:

- Consolidation — Merge similar experiences over time

- Decay — Deprioritize unused information

- Reconsolidation — Update retrieved memories with new information

- Temporal Validity — Track when facts were true vs when they changed

- Uncertainty Tracking — Distinguish facts from inferences

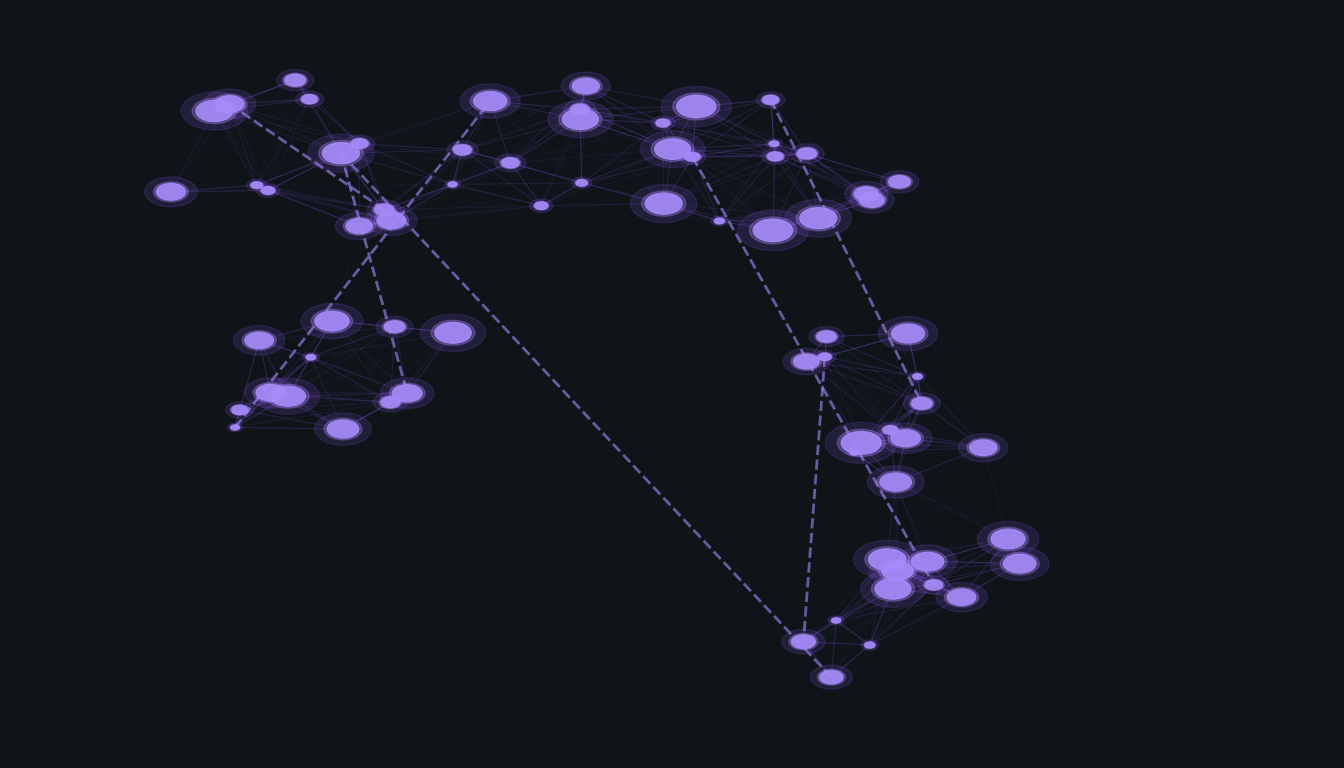

Architecture

Engram uses dual storage: Qdrant for semantic search and markdown files for human readability.

Memory Metadata Schema

Every memory in Qdrant carries structured metadata:

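    interface StoredMemory {
      text: string;
      source: string;
      timestamp: number;
      entities: StoredEntity[];
      relationships: StoredRelationship[];
      importance: number;
      certainty: number;      // 1.0 stated fact, 0.7 strong inference, 0.4 weak guess
      access_count: number;   // incremented on each successful recall
      last_accessed: number;  // updated on each successful recall
      forgotten: boolean;
    }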

The certainty field distinguishes stated facts (1.0) from strong inferences (0.7) and weak guesses (0.4). Temporal validity fields (`validFrom`, `validUntil`) enable tracking fact changes over time.

Entity Resolution and Deduplication

Entities are identified by deterministic IDs: SHA-256(lowercase(name) + type).slice(0,16).
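
The ID scheme above can be sketched with Node's built-in crypto module. This is a minimal illustration, not Engram's actual code: the helper name `entityId` is hypothetical, and it assumes the name and type are concatenated with no separator and that the 16-character slice is taken from the hex digest.

```typescript
import { createHash } from "node:crypto";

// Hypothetical helper: derives a deterministic entity ID from name and type.
// Assumptions: plain concatenation (no separator) and a hex-encoded digest.
function entityId(name: string, type: string): string {
  return createHash("sha256")
    .update(name.toLowerCase() + type) // lowercase(name) + type
    .digest("hex")
    .slice(0, 16); // first 16 hex characters = 64 bits of the hash
}
```

Because the name is lowercased before hashing, "Ben" and "BEN" of the same type map to the same ID, which is what makes the exact-name auto-merge cheap. One thing to watch with plain concatenation: a name/type pair like ("ab", "c") hashes the same input as ("a", "bc"), so a separator may be worth adding if types are free-form.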

Deduplication strategies:

- Exact name match (case-insensitive) — Auto-merge

- Same name, multiple types — Auto-merge to canonical type

- Fuzzy string matching (>75% Levenshtein) — Human review

- Singular/plural variants — Auto-merge
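
A rough sketch of how the name-based strategies could be routed. Everything here is illustrative: `classifyPair`, `singular`, and the `Decision` type are hypothetical names, the plural handling is a naive trailing-"s" strip, and ">75% Levenshtein" is interpreted as a normalized similarity of 1 - distance / max(length). The type-merging strategy is left out.

```typescript
type Decision = "auto-merge" | "human-review" | "distinct";

// Classic dynamic-programming edit distance, keeping only two rows.
function levenshtein(a: string, b: string): number {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Naive singular form (assumption): strip a single trailing "s".
function singular(name: string): string {
  return name.endsWith("s") ? name.slice(0, -1) : name;
}

function classifyPair(a: string, b: string): Decision {
  const x = a.trim().toLowerCase();
  const y = b.trim().toLowerCase();
  if (x === y) return "auto-merge";                     // exact, case-insensitive
  if (singular(x) === singular(y)) return "auto-merge"; // singular/plural variant
  const maxLen = Math.max(x.length, y.length);
  const similarity = maxLen === 0 ? 1 : 1 - levenshtein(x, y) / maxLen;
  return similarity > 0.75 ? "human-review" : "distinct";
}
```

Sending fuzzy matches to review rather than merging them keeps near-misses such as "Jonathan" and "Jonathon" from being silently collapsed into one entity.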

Production results (Feb 14, 2026):

- Before: 3,163 entities, 504 potential duplicates

- After: 3,116 unique entities (48 merged)

Implementation

Auto-Capture (After Each Turn)

A local LLM (GLM-4.7-Flash, 30B MoE) extracts 0-5 key facts worth remembering. Average extraction latency: 4 seconds per turn (asynchronous, non-blocking).

The extraction prompt:

- Targets preferences, decisions, personal info, project updates, opinions, and plans

- Skips greetings, tool outputs, and temporary debugging

Deduplication before storage: Before storing a new fact, embed it and search Qdrant for near-duplicates (cosine similarity >0.85). If found, update the existing memory instead of creating clutter.
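
The check-then-update flow can be sketched against an in-memory array standing in for Qdrant. This is an assumption-laden illustration: `MemoryRecord`, `upsertMemory`, and the brute-force scan are hypothetical, and a real deployment would issue a vector search with a score threshold instead of looping over every record.

```typescript
interface MemoryRecord {
  id: string;
  text: string;
  vector: number[]; // embedding of `text`
  timestamp: number;
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  const denom = Math.sqrt(normA) * Math.sqrt(normB);
  return denom === 0 ? 0 : dot / denom;
}

const DUPLICATE_THRESHOLD = 0.85; // threshold quoted in the text

// Updates the closest near-duplicate in place, or appends the candidate.
function upsertMemory(
  store: MemoryRecord[],
  candidate: MemoryRecord
): "updated" | "inserted" {
  let best: MemoryRecord | undefined = undefined;
  let bestScore = DUPLICATE_THRESHOLD;
  for (const existing of store) {
    const score = cosineSimilarity(existing.vector, candidate.vector);
    if (score > bestScore) {
      best = existing;
      bestScore = score;
    }
  }
  if (best) {
    // Keep the existing ID so references to this memory stay valid.
    best.text = candidate.text;
    best.vector = candidate.vector;
    best.timestamp = candidate.timestamp;
    return "updated";
  }
  store.push(candidate);
  return "inserted";
}
```

Overwriting the text on a match is only one possible update policy; merging the two texts or keeping the older wording with a refreshed timestamp would fit the same flow.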

Auto-Recall (Before Each Turn)

Semantic search retrieves relevant memories and injects them as context. Each successful retrieval:

- Increments access_count (reinforcement)

- Updates last_accessed (recency tracking)

Memory recall adds 50-150ms to turn latency (acceptable for conversational AI).
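
The reinforcement bookkeeping on recall might look like the sketch below. It assumes the vector search has already returned scored hits; `recall`, `SearchHit`, and `RecallableMemory` are hypothetical names, and the default cutoff of 0.5 is the tuned threshold reported in the results.

```typescript
interface RecallableMemory {
  text: string;
  access_count: number;
  last_accessed: number;
}

interface SearchHit<T extends RecallableMemory> {
  memory: T;
  score: number; // similarity score from the vector search
}

// Keeps hits at or above the cutoff and records the access on each one.
function recall<T extends RecallableMemory>(
  hits: SearchHit<T>[],
  minScore = 0.5,
  now: number = Date.now()
): T[] {
  const recalled: T[] = [];
  for (const hit of hits) {
    if (hit.score < minScore) continue; // too weak to inject into context
    hit.memory.access_count += 1;       // reinforcement
    hit.memory.last_accessed = now;     // recency tracking
    recalled.push(hit.memory);
  }
  return recalled;
}
```

Only memories that are actually injected get reinforced here, so a memory that merely appears low in the search results does not accumulate accesses.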

Temporal Validity and Reconsolidation

When a new fact contradicts an existing one, auto-invalidation fires:

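    // Close out any still-open relationship with the same source and type
    // that points at a different target than the new fact.
    async function invalidateConflictingRelationships(
      source: string, type: string, newTarget: string
    ): Promise<void> {
      // `db` and `searchRelationships` are not shown in this excerpt.
      const existing = await searchRelationships(db, source, type);
      const now = Date.now(); // one timestamp for everything closed in this call
      for (const rel of existing) {
        if (rel.target !== newTarget && !rel.validUntil) {
          rel.validUntil = now; // Invalidate old relationship
        }
      }
      // Writing the modified relationships back to storage is not shown here.
    }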

Example:

- Store: "Ali works_at Stanford" (Jan 2024)

- Store: "Ali works_at Google" (Feb 2025) → Auto-invalidates Stanford relationship

- Store: "Ali works_at Meta" (Feb 2026) → Auto-invalidates Google relationship

Query "Where does Ali work?" retrieves only the active relationship (Meta).
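
Reading the history back is a filter on the validity window. The sketch below is illustrative only: the `Relationship` shape and the `activeAt` helper are assumptions, and the month-level dates from the example are pinned to the first of each month in UTC.

```typescript
interface Relationship {
  source: string;
  type: string;
  target: string;
  validFrom: number;   // epoch milliseconds
  validUntil?: number; // undefined while the fact is still current
}

// Returns the relationships that were valid at the given instant.
function activeAt(
  rels: Relationship[],
  source: string,
  type: string,
  at: number = Date.now()
): Relationship[] {
  return rels.filter(
    (r) =>
      r.source === source &&
      r.type === type &&
      r.validFrom <= at &&
      (r.validUntil === undefined || at < r.validUntil)
  );
}

// The Ali example, after both invalidations have fired.
const aliHistory: Relationship[] = [
  { source: "Ali", type: "works_at", target: "Stanford",
    validFrom: Date.UTC(2024, 0, 1), validUntil: Date.UTC(2025, 1, 1) },
  { source: "Ali", type: "works_at", target: "Google",
    validFrom: Date.UTC(2025, 1, 1), validUntil: Date.UTC(2026, 1, 1) },
  { source: "Ali", type: "works_at", target: "Meta",
    validFrom: Date.UTC(2026, 1, 1) },
];
```

With this data, `activeAt(aliHistory, "Ali", "works_at", Date.UTC(2026, 1, 14))` returns only the Meta relationship, while passing `Date.UTC(2025, 5, 1)` returns Google. The same filter therefore covers both "Where does Ali work?" and "Where did Ali work in June 2025?".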

Uncertainty Tracking

GLM classifies certainty during extraction. Production distribution:

- 1.0 (stated facts): 42%

- 0.7 (strong inferences): 51%

- 0.4 (weak guesses): 7%
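
One way the three certainty levels could surface in the injected context is a label in front of each recalled memory. This is a hypothetical sketch: `certaintyLabel` and `renderForContext` are invented names, and the cutoffs are simply the midpoints between the three values listed above.

```typescript
type CertaintyLabel = "stated" | "strong inference" | "weak guess";

// Buckets a numeric certainty using midpoints between 1.0, 0.7, and 0.4.
function certaintyLabel(certainty: number): CertaintyLabel {
  if (certainty >= 0.85) return "stated";
  if (certainty >= 0.55) return "strong inference";
  return "weak guess";
}

// Prefixes a memory with its label before it is injected as context.
function renderForContext(text: string, certainty: number): string {
  return `[${certaintyLabel(certainty)}] ${text}`;
}
```

For example, `renderForContext("Ben prefers dark mode", 0.7)` yields `[strong inference] Ben prefers dark mode`, which gives the model something concrete to hedge on.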

Results

Production Metrics (Feb 14, 2026)

| Metric | Value |

|---|---|

| Total memories | 3,030 |

| Unique entities | 3,116 |

| Entity coverage | 87.9% |

| Entities merged | 48 |

| Auto-capture latency | 4s (async) |

| Auto-recall latency | 50-150ms |

Qualitative Improvements

- Context continuity: The AI references week-old decisions without re-explanation

- Self-correction: When facts change, old memories update instead of creating contradictions

- Reduced noise: Raising the similarity threshold from 0.3 to 0.5 cut irrelevant recalls by ~40%

- Certainty awareness: Responses now qualify inferences with confidence levels

Lessons Learned

Dual Storage Is Worth the Complexity

Vector search is fast but opaque. Markdown is slow but debuggable. Having both means you can inspect what the AI "remembers" and fix it when it's wrong.

Forgetting Is a Feature, Not a Bug

The system intentionally deprioritizes unused memories. This prevents context pollution—old, irrelevant information crowding out what actually matters today.
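
The article does not give a decay formula, so the following is purely an illustrative assumption: exponential decay on idle time with a 30-day half-life, offset by a logarithmic boost from the access count. The name `retentionScore` and every constant are hypothetical.

```typescript
const DAY_MS = 86_400_000;

interface DecayInput {
  importance: number;
  access_count: number;
  last_accessed: number; // epoch milliseconds
}

// Higher score = more worth keeping in the recall pool.
function retentionScore(
  m: DecayInput,
  now: number = Date.now(),
  halfLifeDays = 30 // assumed; the source leaves decay tuning open
): number {
  const idleDays = Math.max(0, now - m.last_accessed) / DAY_MS;
  const decay = Math.pow(0.5, idleDays / halfLifeDays); // halves every half-life
  const reinforcement = 1 + Math.log2(1 + m.access_count); // diminishing returns
  return m.importance * decay * reinforcement;
}
```

Under these assumptions, a memory that was never recalled loses half its score after 30 idle days, while a single recall doubles the baseline. Making the half-life a parameter leaves room for different decay rates per context.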

Reconsolidation Solves a Real Problem

When facts change, most AI systems either duplicate information or miss the update entirely. Reconsolidation prevents both. It's one of the highest-ROI features.

Cognitive Science Provides Better Priors

I didn't invent these mechanisms. I borrowed them from decades of memory research: consolidation, decay, reconsolidation, spaced retrieval, the testing effect. Applying them to AI memory wasn't novel—but it was effective.

Open Questions

How do you measure memory "usefulness" beyond recall frequency? Frequency isn't the whole story—some important memories (passwords, emergency contacts) are rarely accessed.

Can uncertainty tracking guide active learning? If the system knows it's uncertain, should it ask clarifying questions?

What's the right decay curve for professional vs personal contexts? Work information might need faster decay than personal relationships.

Certainty-weighted retrieval: Future enhancement where final_score = semantic_score × certainty × importance × recency_boost.
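
The proposed formula is easy to prototype as a re-ranking step over search results. This sketch takes the four factors as given inputs; how `recencyBoost` would be computed is not specified in the article, so it is treated here as a caller-supplied number, and the `ScoredCandidate` shape and function names are hypothetical.

```typescript
interface ScoredCandidate {
  text: string;
  semanticScore: number; // similarity from the vector search
  certainty: number;     // 1.0, 0.7, or 0.4
  importance: number;
  recencyBoost: number;  // assumed to be supplied by the caller
}

// final_score = semantic_score × certainty × importance × recency_boost
function finalScore(c: ScoredCandidate): number {
  return c.semanticScore * c.certainty * c.importance * c.recencyBoost;
}

// Returns a new array sorted by final score, highest first.
function rankCandidates(candidates: ScoredCandidate[]): ScoredCandidate[] {
  return [...candidates].sort((a, b) => finalScore(b) - finalScore(a));
}
```

With equal importance and recency, a stated fact at similarity 0.8 scores 0.8 × 1.0 = 0.8 on the first two factors, while a weak guess at similarity 0.9 scores 0.9 × 0.4 = 0.36, so the stated fact ranks first despite the lower similarity. Because the factors multiply, a zero in any one of them removes the memory from contention entirely, which may or may not be the desired behavior.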

Conclusion

Engram demonstrates that applying cognitive science principles to AI memory improves conversational coherence. The system isn't perfect—decay tuning needs work, certainty-weighted retrieval isn't implemented yet—but the core hypothesis holds: memory mechanisms from cognitive science translate directly to production AI systems with measurable benefits.